#044 - Python Environments, Again | uv: A Guide to Python Package Management

A Streamlined Method to Get Up and Running with Jupyter Notebooks in VS Code

"Everything should be made as simple as possible, but not simpler."

– Albert Einstein

For the last year or so, I’ve been using Poetry for managing Python environments and packages, and it’s served me well. But in the spirit of continuous improvement and shiny-thing syndrome, I started experimenting with uv, a tool that promises to streamline these tasks even further. I’ve been playing with it for a couple of months now.

uv is an extremely fast Python package installer and resolver, written in Rust, and designed as a drop-in replacement for pip and pip-tools workflows.

To read more about the philosophy and development of uv, check out the developers’ blog post.

A major objective of Flocode is to simplify the path for engineers to start using Python. The topic of environment and dependency management is one of the most common and frustrating challenges I hear about from engineers who are new to Python.

I’ll guide you through a basic project setup with a Jupyter Notebook in VS Code, which is the most common way that I work with Python.

You can also check out this video tutorial, which might be helpful to follow along with. This is my recommended way to set up Python for engineering projects, allowing you to just get on with it.

** Since recording this, I’ve learned uv can also manage your Python installations, as opposed to pyenv-win which I mention in this video. Either way is good but moving forward I will be using uv for managing Python installations.

It’s nice to have everything under one roof, but there are some complexities when opening up old projects.

Why uv Is Worth Exploring

Speed and Efficiency

According to the uv documentation, one of its standout features is its speed. It claims to significantly reduce package installation times, with performance reportedly up to 100 times faster than pip. You can check out the impressive benchmarks here.

This potential for speed is interesting, but it’s not really a factor for me; my projects are rarely complex enough for this to be an issue.

Most of the time, I have raw data and a few Jupyter notebooks or scripts.

But uv is noticeably faster than other options (pip, Poetry), which is never a bad thing.

Disk Space Efficiency

Another feature that uv emphasizes is its approach to disk space management, similar to how pnpm operates in the Node.js ecosystem. uv uses a global cache to minimize redundancy, ensuring that identical packages are stored only once, regardless of how many projects require them. This approach theoretically keeps your system from getting bogged down with duplicate packages across projects.

This is something that always annoyed me. Why do I need to reinstall the same packages into individual virtual environments, resulting in unnecessary, disk-filling duplication? Well, I don’t anymore, which is a real treat.
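To make the "stored only once" idea concrete, here is a toy sketch in Python. It assumes a hard-link strategy, which is one common way a shared cache can avoid duplicates; how uv actually links files depends on your platform and settings, so treat this as an illustration of the concept rather than uv's implementation. The folder and file names are made up.

```python
import os
import tempfile
from pathlib import Path


def link_from_cache(cache_file: Path, venv_dir: Path) -> Path:
    """Place a cached file into an environment as a hard link, not a copy."""
    venv_dir.mkdir(parents=True, exist_ok=True)
    target = venv_dir / cache_file.name
    os.link(cache_file, target)  # second name for the same data on disk
    return target


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)

    # Hypothetical "global cache" holding one package file.
    cache_file = root / "cache" / "example_package.py"
    cache_file.parent.mkdir()
    cache_file.write_text("VALUE = 42\n")

    # Two hypothetical projects that both need the same package.
    copy_a = link_from_cache(cache_file, root / "project_a" / ".venv")
    copy_b = link_from_cache(cache_file, root / "project_b" / ".venv")

    # Both environments point at the same underlying file.
    shares_storage = os.path.samefile(copy_a, copy_b)
    link_count = cache_file.stat().st_nlink  # cache entry + two environments

    print("Same file on disk:", shares_storage)
    print("Names pointing at it:", link_count)
```

Each project sees its own file, but the data is only written to disk once.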

Certain tools like ipykernel still need to be installed in each environment to ensure proper integration with Jupyter Notebooks. So far, this setup is working really well for me. I can fire up new projects very quickly and I feel like there’s less time finessing things than with Poetry.

Using uv to handle Python installations is another useful feature. I was using pyenv-win but uv has removed that necessity. It’s a little different in how it works but I love the simplicity. Basically, uv checks your project configuration file (pyproject.toml, created automatically when you initialize a project) and installs the necessary Python version for you. If you already have the required Python version installed, great, it recognizes it and avoids duplication.

Cross-Platform Compatibility

While most engineers primarily work in Windows, the cross-platform support in uv—covering macOS, Linux, and Windows—is still worth noting. This feature ensures that if you ever need to switch environments, whether for a specific project or collaboration, your setup remains consistent. Although I work in Windows, it’s reassuring to know that uv can handle other platforms, if needed. So far, my experience with it has been very smooth.

Some developers have noted problems when transferring projects between Windows/Mac and Linux but if you’re a structural engineer out there working on a Mac, put down your artisanal croissant and get a grip of yourself.

Virtual Environment Capabilities

One of my favorite features of uv is how fast and easy it is to create virtual environments.

uv venv

With this command, you can set up an isolated environment in seconds, ensuring that your project's dependencies stay neatly separated from others. uv also re-uses packages from a global cache, so that common dependencies are installed instantly, further speeding up the setup process. By default, VS Code automatically recognizes your venv and syncs with your project. It’s a small thing but it’s a very slick quality-of-life feature.

By leveraging cached packages, uv not only saves time but also reduces redundancy across projects. This is a slick feature.

Sometimes something is not working quite right so you might want to delete the .venv folder, and simply recreate it. Super easy and fast.

If needed, you can also specify a custom venv name (e.g. my-env) or Python version (e.g. 3.12.4). I rarely need to do this; I just stick with the default name, .venv.

uv venv my-env --python 3.12.4

Syncing Your Environment with uv sync

The uv sync command is designed to keep your virtual environment aligned with your project's dependency requirements. When you run:

uv sync

uv compares the current state of your environment against the dependencies specified in your pyproject.toml and uv.lock files. (If you prefer a requirements.txt workflow, the equivalent command is uv pip sync requirements.txt; I prefer to work with pyproject.toml.) It then performs the following actions:

Installation: Installs any missing dependencies that are listed in your requirements but not yet present in your environment.

Upgrades/Downgrades: Adjusts the versions of existing packages to match the versions specified in your requirements. This ensures that your environment is using the exact versions you’ve defined, which is crucial for avoiding unexpected behavior due to version mismatches.

Uninstallation: Removes any packages that are installed in the environment but not listed in your requirements, keeping the environment clean and free from unnecessary bloat.
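The three actions above boil down to comparing two lists. Here is a simplified Python sketch of that comparison. It is not how uv is implemented, and the package versions are made up for the example, but it shows the logic of working out what to install, change and remove.

```python
def plan_sync(required: dict[str, str], installed: dict[str, str]) -> dict[str, list[str]]:
    """Compare required vs installed packages and return the actions needed."""
    to_install = sorted(name for name in required if name not in installed)
    to_change = sorted(
        name
        for name in required
        if name in installed and installed[name] != required[name]
    )
    to_remove = sorted(name for name in installed if name not in required)
    return {"install": to_install, "change": to_change, "remove": to_remove}


# Hypothetical versions, for illustration only.
required = {"pandas": "2.2.2", "ipykernel": "6.29.5", "numpy": "2.0.1"}
installed = {"pandas": "2.1.0", "numpy": "2.0.1", "requests": "2.32.3"}

plan = plan_sync(required, installed)
print(plan)
```

In this example ipykernel is missing, pandas is at the wrong version, and requests is no longer needed, so each lands in a different bucket while numpy is left alone.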

You can check out a detailed list of uv commands here. This gives you a sense of what you can do.

Step-by-Step Tutorial: Setting Up a Jupyter Notebook Using uv

This is a no-nonsense tutorial to get you up and running with Python, locally on your machine, in a way that lets you start doing real engineering work via a Jupyter Notebook.

This is how I start 80% of my projects.

This tutorial skims over some important aspects of Python project setup but the main objective here is to get you started. You can learn the ins and outs as you gain comfort.

There are a few steps here that might seem obscure and unfamiliar; don’t worry. As you gain experience you will understand how PowerShell and the command prompt can be extremely powerful tools to increase your efficiency.

1. Install PowerShell

If you haven’t already, install the latest version of PowerShell. See instructions here.

In the tutorial, I’m using Version 7.4.5. Just a warning: this could take a few minutes, as the install can be slow.

Open up the Windows command prompt and run:

winget install --id Microsoft.PowerShell --source winget

2. Install uv and Use it to Manage Python

I recommend a clean installation for ease of use so assuming Python is not installed:

Using PowerShell, install uv:

Invoke-WebRequest -Uri https://astral.sh/uv/install.ps1 -OutFile install.ps1; powershell -ExecutionPolicy Bypass -File ./install.ps1

Now that uv is installed, you can use it to install and manage Python:

To install the latest version of Python, run:

uv python install

You can also install a specific version like this:

uv python install 3.12.6

Verify the Python installation by checking the version:

uv python --version

After this, uv will manage your Python installations.

Setting Up a Python Project with uv

This is a basic setup that I use for data analysis and engineering calculations in a Jupyter Notebook through VS Code.

When you initialize a project with uv, it often creates a default src directory to encourage a clean and modular project structure. This directory is intended to house your main Python code, helping to avoid potential import issues and separating your application logic from other files like configurations, data, and documentation.

While it's fine to keep small projects or notebooks in the root folder, organizing your code within a src directory is a best practice, particularly as projects grow in complexity.

Most of my projects have notebooks in the root folder with supporting data organized in sub-folders like data, reports, drawings and results.

Step 1: Create a Project Folder and Initialize with uv

Create a Project Folder: First, create a new folder where you want to store your project files. This folder will be your project directory. You can do this by using File Explorer, or directly in PowerShell with a command like:

mkdir flocode_lean_startup

Navigate to Your Project Folder: After creating the folder, move into it using PowerShell:

cd flocode_lean_startup

Initialize the Project with uv: In your project folder, initialize the project with:

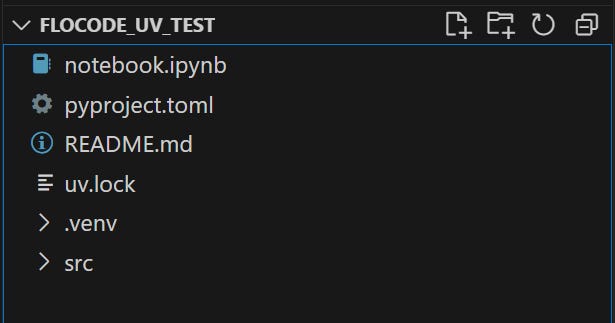

uv init

This command creates a default project and sets up the necessary structure to manage your Python dependencies. It includes:

flocode_lean_startup/
│
├── pyproject.toml   # Main config file for dependencies and settings
├── README.md        # Project description and documentation
└── src/             # Main source code directory
    └── __init__.py  # Initializes src as a Python package

Step 2: Create a Virtual Environment (Optional)

If you want, you can now create and activate a virtual environment by simply running:

uv venv

Activate your environment with:

.venv\Scripts\activate

A nice feature is that if you haven’t created a virtual environment, uv automatically creates one as soon as you add any dependency to your project. The .venv folder is automatically added to the root folder of your project, making it easy for VS Code to find it and recommend it as your preferred virtual environment. This removes extra steps, which I really appreciate. One less hassle.

Step 3: Add Your First Package (ipykernel)

With your project initialized, you can now add the ipykernel package, which is essential if you plan to work with Jupyter Notebooks:

uv add ipykernel

Next, install any additional packages you need for your project. For example, you might want to add pandas for data manipulation:

uv add pandas

Each uv add command records the specified package in your pyproject.toml and installs it into your virtual environment, making it available for use in your project.

Step 4: Installing from an existing .toml file

If you have an existing project with a .toml file (e.g., a flocode project from GitHub), you can easily install dependencies by running:

uv sync

This command installs or updates all dependencies specified in the pyproject.toml and uv.lock files into the project's virtual environment, ensuring your environment matches the declared dependencies.

Step 5: Create a Jupyter Notebook File

Once your packages are installed, you can create a new Jupyter Notebook file to start working with your data:

Create a Notebook File: In VS Code, go to File > New File, name it notebook.ipynb, and save it in your project directory.

Open the Notebook: Open this file in VS Code. You’ll be able to write and execute Python code within this notebook.

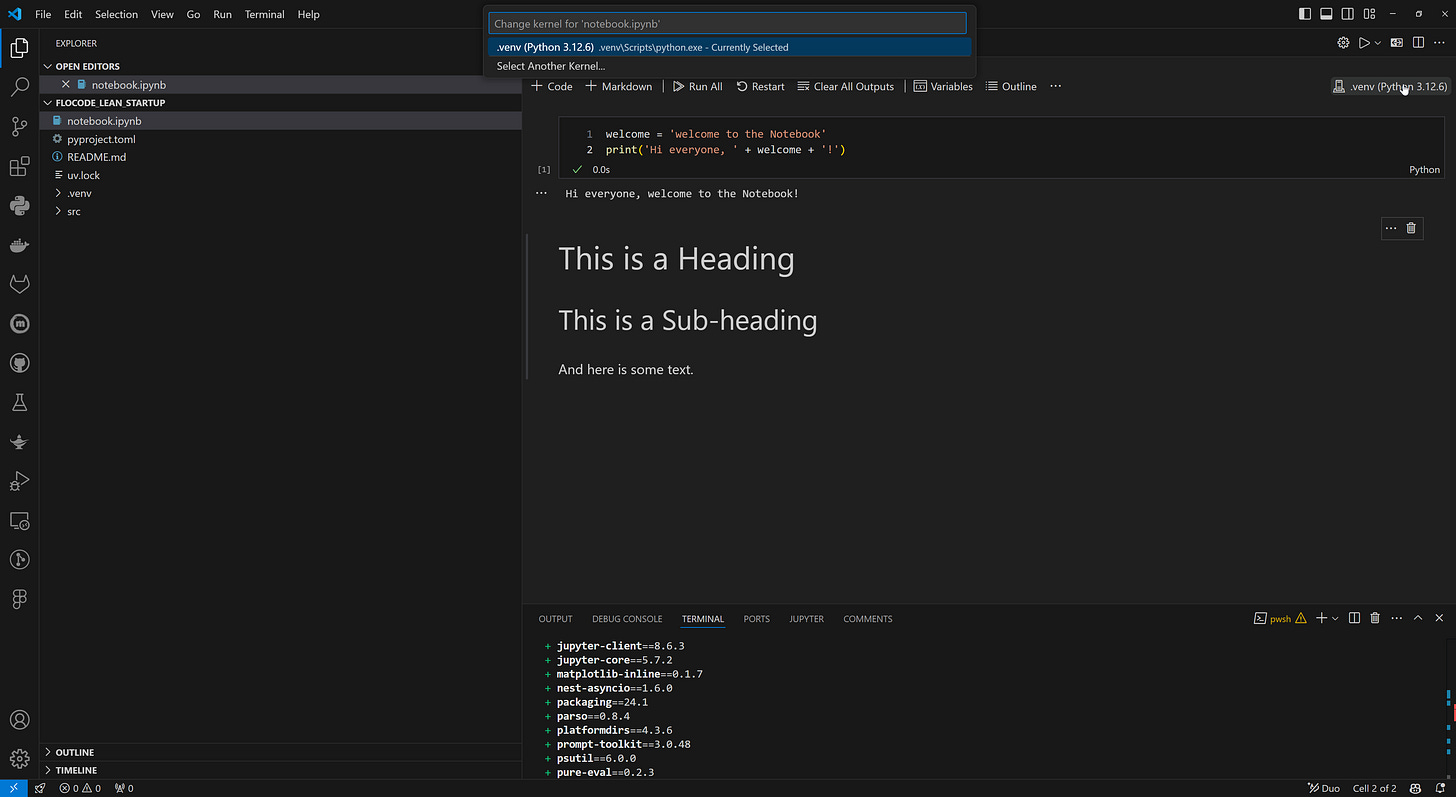

Step 6: Select Your Virtual Environment in VS Code

After creating the notebook, ensure that it’s using the correct virtual environment:

Open the Kernel Selector: In your notebook file in VS Code, look for the kernel selector in the top right corner of the notebook interface.

Select Your Environment: Click on the selector and choose the virtual environment associated with your project (typically .venv). This ensures that the notebook uses the packages and Python interpreter from your project’s virtual environment.
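A quick way to confirm the kernel is wired up correctly is to run a small check in the first cell of your notebook. This is just a sketch using the Python standard library; the package names in the list are the ones from this tutorial, so swap in whatever your project needs.

```python
import importlib.util
import sys


def in_virtual_environment() -> bool:
    """True when the running interpreter belongs to a virtual environment."""
    return sys.prefix != sys.base_prefix


def missing_packages(names: list[str]) -> list[str]:
    """Return the names from the list that cannot be imported here."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# The interpreter path should point inside your project's .venv folder.
print("Interpreter:", sys.executable)
print("Virtual environment active:", in_virtual_environment())
print("Missing packages:", missing_packages(["ipykernel", "pandas"]))
```

If the interpreter path is not inside your project folder, or a package shows up as missing, go back to the kernel selector and pick the .venv environment again.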

Step 7: Build Things

Now you’re good to go. Add or remove dependencies as you need to. You can also modify the pyproject.toml file to add license info or a project description. Another useful command is:

uv pip freeze > requirements.txt

This command will generate a requirements.txt file that lists all the installed packages in your active environment, along with their versions. This file can then be used to recreate the environment on another system or for documentation purposes.
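If you ever want the same kind of list from inside a notebook, the standard library can produce something similar. This sketch is not what uv runs under the hood; it simply lists the packages visible to the current interpreter in the familiar name==version form.

```python
from importlib.metadata import distributions


def freeze_lines() -> list[str]:
    """List installed packages as 'name==version', sorted case-insensitively."""
    pins = set()
    for dist in distributions():
        name = dist.metadata.get("Name")
        if name:  # skip any entry with broken or missing metadata
            pins.add(f"{name}=={dist.version}")
    return sorted(pins, key=str.lower)


for line in freeze_lines():
    print(line)
```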

I usually use the pyproject.toml files but many people like to use a requirements.txt file.

Conclusion

So far, my experience with uv has been very positive. It’s already replaced Poetry in my toolbox. Yes, there might be some headaches and memory jogging when I go back to old code but I’m experiencing fewer setup headaches and focusing more on my work - this is extremely valuable.

The speed and efficiency are impressive. I’m waiting for an inevitable slap in the face but it hasn’t happened yet.

I’ll continue to experiment and share my findings. For now, if you’re curious and looking for ways to optimize your environment management, I recommend experimenting with uv.

As always, I’m open to feedback and interested in hearing if anyone else has tried uv.

How do you like to manage your Python projects?

See you in the next one!

James 🌊